How a Smart Sitemap Strategy Builds Real Visibility Online

Search engines follow instructions very carefully. They rely on technical signals to decide what to crawl, when to visit again, and where to send users. One small metadata hiccup, and the whole show shifts to the next best domain. That’s where sitemap strategy walks on stage, clutching a clipboard and shouting marching orders at every crawler bot on the planet.

Most folks hear “sitemap” and picture a dusty XML file sitting quietly in a folder. That file speaks directly to Google, Bing, Perplexity, and a few AI-powered friends deciding who gets indexed and who waits in the hallway. A sitemap makes promises. When it overpromises, trust takes a nosedive. When it under-delivers, traffic sneaks out the back.

This guide explains exactly how sitemap signals shape visibility, how bots respond, and how to win their trust back with precision. Expect timestamps, priorities, and robots.

Why Search Engines Care About Sitemap Files

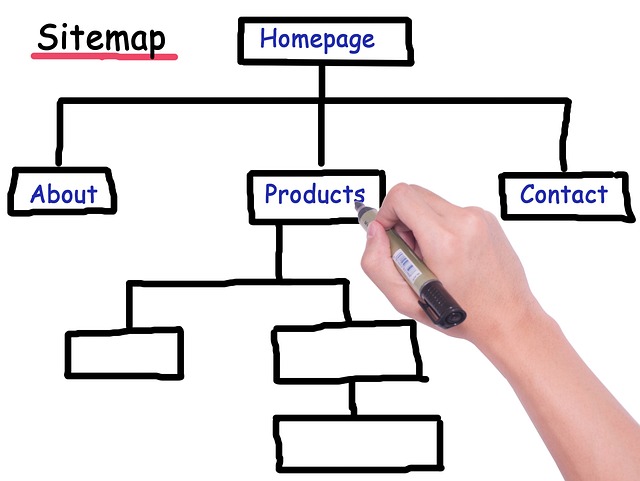

Search engines follow a process that starts with crawling, moves through indexing, and finishes with ranking. Crawlers act like tireless interns who need directions. A sitemap file gives them that exact guidance.

It lists every important URL, shows when pages were last updated, and signals which pages deserve more attention. Bots prefer precision, so when a sitemap says something changed, they expect to see something genuinely new. This file works like a schedule mixed with a to-do list. Crawlers want to spend time wisely, so they rely on sitemaps to point them toward high-value pages.

When the signals match reality, trust builds. Crawlers return more often, check pages faster, and prioritise new content. Sitemap strategy handles this coordination clearly. It keeps the bots focused and makes sure they always know where the good stuff lives. Without that structure, crawling slows, and fresh content waits too long for its moment.
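To make those signals concrete, here is a minimal Python sketch that reads a tiny sitemap and pulls out the three fields crawlers look at. The sample file and its example.com URLs are made up for illustration, and `read_entries` is a hypothetical helper name; only the namespace comes from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical two-entry sitemap; the example.com URLs are placeholders.
SAMPLE = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/</loc>
    <lastmod>2024-05-01</lastmod>
    <priority>1.0</priority>
  </url>
  <url>
    <loc>https://example.com/blog/first-post</loc>
    <lastmod>2024-04-18</lastmod>
    <priority>0.6</priority>
  </url>
</urlset>"""


def read_entries(xml_text):
    """Return one dict per <url> with its loc, lastmod, and priority."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    entries = []
    for url in root.findall("sm:url", NS):
        entries.append({
            "loc": url.findtext("sm:loc", default="", namespaces=NS).strip(),
            "lastmod": url.findtext("sm:lastmod", default=None, namespaces=NS),
            "priority": url.findtext("sm:priority", default=None, namespaces=NS),
        })
    return entries


for entry in read_entries(SAMPLE):
    print(entry["loc"], entry["lastmod"], entry["priority"])
```

Each entry answers the same questions a crawler asks: where the page lives, when it last changed, and how much weight the site gives it.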

What Sitemap Strategy Actually Means

Sitemap strategy might sound like something straight from a developer’s playbook, but it works just like a checklist. Think of it as a smart briefing for search engine bots before they go exploring. It tells them three key things:

- 🧭 Which pages deserve their attention

- 🕒 When those pages last changed

- 📍 Where those pages currently live

Bots treat this information as a schedule. They rely on it to make decisions quickly. Every timestamp, redirect, and priority score gets recorded and remembered. When those signals stay accurate, bots gain confidence. That confidence turns into faster crawling and more frequent visits.

Without strategy, the sitemap becomes a messy menu filled with half-baked dishes and 404 appetisers. Equal priority scores blur what matters. Redirects waste precious crawl time. A smart sitemap strategy clears the table and lays out the meal properly, with zero confusion and no spoiled bananas. 🍌

Steps to Build a Winning Sitemap Strategy

A strong sitemap strategy needs structure, upkeep, and a little common sense. Crawlers rely on clear instructions, and this step-by-step plan ensures they always get the right message. Each part plays a role in building trust, speeding up indexing, and keeping your site visible.

1. 🔍 Audit the current sitemap

Start by pulling the existing sitemap file and running it through a crawler like Screaming Frog or Sitebulb. Look for:

- URLs that return 404 errors

- Links pointing to redirect chains

- Timestamps that don’t reflect actual content changes

- Missing pages like new blog posts, product launches, or updated categories

This check shows exactly where the file needs cleanup or repair.

2. ⚙️ Set up dynamic generation

Static sitemaps go stale fast. Dynamic generation means the sitemap updates automatically whenever content changes. Use plugins for WordPress, enable built-in options, or create a custom script for other platforms.

Make sure this process:

- Adds new content within 24 hours

- Removes deleted or redirected pages

- Reflects true last-modified dates in each lastmod field

3. 🎯 Assign smart priorities

Every page can carry an optional priority value between 0.0 and 1.0. These values are hints that help bots decide where to focus. Assigning the same score to every page means nothing stands out. Instead, follow a structure:

- Homepage: 1.0

- Core category or collection pages: 0.9

- Product or service pages: 0.7

- Blog posts and resources: 0.6

- Archive and filter pages: 0.4

- Legal and policy pages: 0.3

These values create a clear editorial signal, helping crawlers prioritise properly.

4. 🧩 Segment large sites with sub-sitemaps

A single file filled with thousands of URLs becomes hard to manage and harder for bots to read, and the sitemap protocol caps each file at 50,000 URLs or 50 MB uncompressed. A sitemap index that points to several sub-sitemaps keeps things tidy. Segment by type and update rhythm:

- Daily: blog posts or news

- Weekly: product or feature pages

- Monthly: category pages

- Quarterly: static content and legal sections

This separation lets crawlers allocate effort where it matters most.

5. 🧹 Maintain regularly

A great sitemap stays great with care. Build a schedule for routine maintenance:

- Weekly: Scan for new 404s, redirects, or errors

- Monthly: Refresh dynamic generation settings and test coverage

- Quarterly: Review crawl stats in Search Console, check indexing speeds, and resubmit sitemaps if needed

Maintenance protects trust, avoids crawl budget waste, and keeps indexing performance consistent.

💥 With everything working together, your sitemap becomes a crawler’s best mate. Search engines respond with faster discovery, higher crawl frequency, and better visibility across the board.

Redirects, 404s, and the Crawl Budget Drain

Crawlers have a daily limit, like a bot-friendly step count. Every redirect burns through that allowance. When a sitemap points to an old URL, bots land there, get redirected, follow the chain, and only then see the actual content. That’s three crawl requests spent on one destination.

Now multiply that across hundreds of outdated links. Crawl budgets vanish faster than biscuits in a break room. Bots might skip newer content because older errors used up their attention span.

Here’s where smart sitemap strategy makes a difference:

- 🔗 Redirects get replaced with final destinations

- 🚫 404s get removed before bots waste time

- 🧽 Files get cleaned so no dead ends stay behind

With regular health checks, sitemaps stay sharp. Crawlers reach fresh content quicker, follow fewer loops, and trust the domain more. A clean sitemap feels like a good satnav, with clear directions, no sudden detours, and zero “recalculating” drama. 🛰️
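The cleanup above can be sketched in a few lines of Python. This is a rough illustration, not a finished tool: `clean_sitemap_urls` is a hypothetical helper, and the `crawl_results` shape (URL mapped to a status code and final URL) is an assumption about what a crawler export might look like. It also assumes each final URL is the true end of the redirect chain.

```python
def clean_sitemap_urls(urls, crawl_results):
    """Drop dead URLs and swap redirecting URLs for their final destination.

    crawl_results maps url -> (status_code, final_url). This shape is an
    assumption for the sketch, for example data exported from a site crawler.
    URLs with no crawl result are kept unchanged.
    """
    cleaned = []
    seen = set()
    for url in urls:
        status, final_url = crawl_results.get(url, (None, url))
        if status in (404, 410):
            continue  # dead end: leave it out of the sitemap
        # For redirects, list the destination instead of the old address.
        target = final_url if status in (301, 302, 307, 308) else url
        if target not in seen:  # avoid listing the same destination twice
            seen.add(target)
            cleaned.append(target)
    return cleaned
```

Run against a crawl export, this leaves a list where every entry resolves in a single request, which is exactly what keeps the crawl budget intact.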

Why Freshness Signals Matter to Crawlers

Timestamps shout, “Something changed!” Bots respond quickly. When a sitemap claims hundreds of pages updated yesterday, bots expect fresh content. If they keep finding the same old thing, trust drops.

Many CMS platforms update timestamps automatically, even when the content itself has not changed. A single login can trigger thousands of false freshness signals. Over time, bots notice the pattern. They adapt by reducing crawl frequency.

This causes delays. New content sits in the waiting room while bots focus on sites that deliver when they say they will. Real freshness deserves real timestamps. That’s how sitemap strategy speeds up discovery.
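One way to keep timestamps honest is to move the date only when the content itself changes. The sketch below does that with a content hash. It is a minimal illustration under stated assumptions: `content_fingerprint` and `next_lastmod` are hypothetical names, and storing a hash next to each page is a design choice of this example, not something any particular CMS is known to do.

```python
import hashlib
from datetime import datetime, timezone


def content_fingerprint(body):
    """Hash the page content so a re-save with identical content is not a change."""
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def next_lastmod(stored_hash, stored_lastmod, body, now=None):
    """Return (hash, lastmod), moving lastmod forward only if the content changed."""
    new_hash = content_fingerprint(body)
    if new_hash == stored_hash:
        # Nothing changed: keep the old date so the sitemap stays truthful.
        return stored_hash, stored_lastmod
    now = now or datetime.now(timezone.utc)
    return new_hash, now.strftime("%Y-%m-%d")
```

With a check like this in place, a login or a bulk re-save leaves every date untouched, and only genuine edits send a freshness signal.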

How Priority Values Shape Crawl Behaviour

Sitemaps allow each page to carry an optional priority score from 0.0 to 1.0. It is a hint about which URLs deserve more attention, and each crawler decides how much weight to give it. Google, for one, has said it ignores the field.

Setting everything to 0.5 sends mixed signals. It says the homepage carries the same weight as an outdated blog post or a forgotten privacy policy.

Strong sitemap strategy uses clear tiers. Top pages might hold a 1.0 score, while evergreen content holds steady around 0.6. Legal pages live comfortably at 0.3. Bots understand this structure and act accordingly.
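A tier table like this is easy to encode. The Python sketch below uses the tier values suggested earlier in this guide. The page-type labels and the `priority_for` helper are hypothetical, and the 0.5 fallback mirrors the default the sitemap protocol assumes when no value is given.

```python
# Tier values from this guide; the page-type keys are illustrative labels.
PRIORITY_TIERS = {
    "home": 1.0,
    "category": 0.9,
    "product": 0.7,
    "blog": 0.6,
    "archive": 0.4,
    "legal": 0.3,
}

# The sitemap protocol treats a missing priority as 0.5.
DEFAULT_PRIORITY = 0.5


def priority_for(page_type):
    """Return the priority for a page type as a one-decimal string."""
    return f"{PRIORITY_TIERS.get(page_type, DEFAULT_PRIORITY):.1f}"
```

Keeping the tiers in one place means every generated entry gets a deliberate value, never an accidental wall of 0.5s.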

Organising Big Sites With Sub-Sitemaps

Big sites come with big responsibilities, and even bigger crawl budgets to protect. A single XML file listing every URL creates digital chaos. It forces bots to wade through a swamp of mixed priorities, which slows everything down. Crawlers like clarity, so it helps to split things into neat, logical parts.

That’s where sub-sitemaps step in. A sitemap index acts like a contents page. It points to smaller files that each serve a different purpose. This setup helps bots focus on fresh content more often, while less active pages receive attention on a quieter schedule.

Here’s an example that keeps everything tidy:

| 🗂️ Sitemap File | 🕒 Update Frequency | 📌 Example Content |
| --- | --- | --- |
| sitemap-blog.xml | Daily | New articles and insights |
| sitemap-products.xml | Weekly | Product and service pages |
| sitemap-categories.xml | Monthly | Collections and categories |
| sitemap-static.xml | Quarterly | About, contact, legal pages |

Bots love patterns. When they see regular updates in some files and rare ones in others, they optimise their visits. That means faster indexing for time-sensitive content and better resource use for the whole site. It also means less wasted energy on pages that rarely change. 💡
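For a feel of what the index file contains, here is a minimal Python sketch that builds one with the standard library. The `build_sitemap_index` helper, the example.com base URL, and the dates are assumptions made for illustration; the element names and namespace follow the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(base_url, files):
    """Build a <sitemapindex> document; files maps filename -> lastmod date string."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for name, lastmod in files.items():
        node = ET.SubElement(root, "sitemap")
        ET.SubElement(node, "loc").text = f"{base_url.rstrip('/')}/{name}"
        ET.SubElement(node, "lastmod").text = lastmod
    return ET.tostring(root, encoding="unicode")


# Hypothetical usage with two of the files from the table above.
index_xml = build_sitemap_index(
    "https://example.com",
    {"sitemap-blog.xml": "2024-05-01", "sitemap-static.xml": "2024-01-15"},
)
print(index_xml)
```

Each sub-sitemap carries its own last-modified date, so a crawler can see at a glance that the blog file moved recently while the static file has been quiet for months.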

What Tools Help With Dynamic Sitemap Strategy

Dynamic sitemap updates rely on tools that know how to stay tidy without constant supervision. The goal is to reflect real content changes, remove redirects, and serve bots clean, honest information. Here’s how to build that setup step by step:

- Install a reliable plugin

Many website platforms support plugins or built-in tools that automatically create and update sitemaps when content changes. These tools generate accurate timestamps, include new pages quickly, and tidy up redirects without fuss.

- Use built-in features where available

Some platforms manage sitemap creation directly, especially for standard themes or templates. With the right settings enabled, dynamic sitemaps stay current without lifting a finger.

- Write a custom script for your CMS

Custom platforms may need a scheduled task or cron job that pulls fresh URLs from the database each day. This script should check for deleted pages, apply real timestamps, and remove redirect sources.

- Run daily regeneration

A daily sitemap refresh keeps crawlers in sync. Every new product, blog post, or landing page appears fast, with signals bots trust.

- Think of it like housework 🧹

These tools do the digital vacuuming. They sweep away bad links, dust off the updates, and polish the path for every visitor, whether human or bot.
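For custom platforms, the regeneration script can be quite small. The Python sketch below shows the core of it under some loud assumptions: `build_urlset` is a hypothetical helper, and the record keys (`url`, `updated`, `deleted`, `redirect_to`) stand in for whatever your own database actually stores.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_urlset(pages):
    """Build a <urlset> from page records, skipping deleted and redirected pages.

    Each record is a dict with hypothetical keys: url, updated (YYYY-MM-DD),
    deleted (bool), and redirect_to (str or None).
    """
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page.get("deleted") or page.get("redirect_to"):
            continue  # never list dead pages or redirect sources
        node = ET.SubElement(root, "url")
        ET.SubElement(node, "loc").text = page["url"]
        ET.SubElement(node, "lastmod").text = page["updated"]
    return ET.tostring(root, encoding="unicode")
```

A scheduled task would call this once a day with fresh rows from the database and write the result to the sitemap file, so the published list only ever contains live pages with real dates.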

How Crawl Budget Allocation Really Works

Crawlers do not visit every page equally. They assign a budget based on trust, freshness, and performance.

If a sitemap wastes their time once, they remember. If it happens repeatedly, they scale back. Over time, crawl limits shrink.

That means fewer crawled pages each day. New content sits in queue. Sitemaps that send reliable signals see higher crawl rates.

Trust builds through clear timestamps, clean redirects, accurate priorities, and fresh content. Sitemap strategy manages all four.

How Proper Sitemaps Improve Indexing Speed

Speed changes everything. When crawlers discover new content quickly, those pages reach the index faster. Fast indexing attracts traffic earlier, collects backlinks sooner, and builds stronger authority with far less effort. Proper sitemap strategy fuels that acceleration.

A structured sitemap gives crawlers a clear, trusted source of truth. Pages marked with accurate timestamps and strategic priorities rise to the top of the crawl queue. Redirects and 404s stay out of the way, so nothing slows the process.

Here’s what happens when the system works correctly:

- 🚀 Full crawl completed within five days

Bots access every listed page, without delay or confusion.

- 🧾 Indexing finalised in under a week

Pages show up in search results almost as fast as they go live.

- 📣 Faster engagement on new topics

Early visibility helps build authority before competitors catch up.

That fast-track effect creates momentum. Bots return more often. Rankings improve steadily. Pages earn more attention across AI-powered services. Meanwhile, slow sitemaps keep others waiting quietly in the queue. ⏳

Measure Sitemap Efficiency Like a Pro

Great sitemap strategy shows up in the numbers. Google Search Console offers a peek behind the scenes, where real crawling and indexing decisions happen. Understanding what those numbers mean helps reveal how well the sitemap supports overall visibility.

Keep an eye on these core metrics:

- ✅ Submitted-to-indexed ratio

This shows how many submitted pages actually made it into the index. A strong ratio sits above 80%, showing search engines believe the signals.

- 📈 Crawl stats for daily requests

Higher numbers suggest bots visit more frequently. That often means they trust the sitemap and expect real updates.

- ⏳ Discovered – currently not indexed

These are pages crawlers found but held back from indexing. Too many here signals that something in the crawl-to-index process needs adjusting.
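The submitted-to-indexed ratio is simple arithmetic once you have the two counts in hand. The Python sketch below works it out. The function names and the sample numbers are made up for illustration, and the 80% target is the rule of thumb from this guide, not an official threshold.

```python
def indexed_ratio(submitted, indexed):
    """Share of submitted URLs that are indexed, as a percentage."""
    if submitted <= 0:
        raise ValueError("submitted must be a positive count")
    return 100.0 * indexed / submitted


def meets_target(submitted, indexed, target=80.0):
    """True when the ratio sits above the target percentage."""
    return indexed_ratio(submitted, indexed) > target


# Hypothetical counts read off a sitemap report.
print(indexed_ratio(1200, 1020))  # 85.0
print(meets_target(1200, 900))    # False, since 900 of 1200 is 75%
```

Tracking the number month over month matters more than any single reading, since a falling ratio is often the first hint that the sitemap's signals have drifted.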

Regular audits help keep things sharp. SEO tools can scan sitemap files, track errors, and highlight outdated entries. These tools give you exact problems to fix, often before they affect rankings. 😎

New Content Needs Fast Discovery

Publishing content without updating the sitemap is like opening a new shop without telling anyone. Bots will eventually find it, but it takes time. That delay means other pages already collect traffic and trust.

With dynamic sitemap generation, every new post enters the queue within 24 hours. Bots show up faster. Indexing happens sooner. Visibility increases.

Speed matters. The faster content enters the index, the quicker it gains traction. Sitemap strategy keeps the lag between hitting “publish” and triggering a crawl as short as possible.
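A quick way to catch pages that were published but never listed is to compare the two sets of URLs. The Python sketch below does that. `missing_from_sitemap` is a hypothetical helper, and treating URLs with and without a trailing slash as the same page is a simplifying assumption that may not hold on every site.

```python
def missing_from_sitemap(published_urls, sitemap_urls):
    """Return published URLs absent from the sitemap, ignoring trailing slashes."""
    def norm(url):
        # Simplifying assumption: /page and /page/ are the same page.
        return url.rstrip("/")

    listed = {norm(url) for url in sitemap_urls}
    return sorted(url for url in published_urls if norm(url) not in listed)
```

Anything this returns is content that bots can only stumble upon by following links, so it is a natural check to run after each publish.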

What Happens When Bots Trust Your Signals

When bots trust your sitemap, everything moves faster. That trust comes from clear, honest signals. Sitemap strategy tells bots where to go, when to visit, and what matters most.

- 🚀 New pages show up in search within days

- 📈 Older pages climb higher with less work

- 🤖 AI tools like ChatGPT and Google’s AI Overviews start showing your content

Pages found early collect better links, more clicks, and stronger search presence, and clean signals are what get them found early.

Why Infrastructure Shapes SEO Success First

Content brings the charm, but sitemaps hold the keys. Without a clear signal, even the best article ends up waiting behind a closed door. Sitemap strategy makes sure that door swings open fast, with a polite little wave to every passing bot.

Crawlers follow order. They like clean structure, honest timing, and pages that tell the truth about their updates. When that foundation stays solid, engines respond with quicker visits, faster indexing, and stronger rankings. Trust grows from consistency, and authority builds on top of that.

The real magic? Sitemap quality keeps giving. One clean file and a regular update routine can spark long-term gains that multiply quietly in the background. Every visit gets easier. Every new page gets seen sooner. And the content finally lands where it belongs.